I am a member of HackCville, a collective of UVA students and Charlottesville community members who are passionate about entrepreneurship, technology, and getting stuff done. This semester, I was a member of the Node, HC’s data science program (I’ll be a Node TA next semester! HC is open to everyone, so apply!). To become a full-fledged HackCville member, every program member must complete and present a final project using what they learned in their program. For my project, I chose to build a program that uses webcam footage to track how many people are at a location over time.

[12/8/17] Note: Wow, this post really blew up on HackerNews! You can check out the discussion here.

Brainstorming

I frequent HackerNews, and as any HN reader knows, machine learning and the Internet of Things are The Future, and if you aren’t working in those areas you might as well not be working (/s!). So I set out to find a project that combined the two in an interesting way. While brainstorming, I remembered a website I had stumbled upon a few months earlier: Insecam, a directory of unsecured, internet-connected webcams from all over the world. Although Insecam initially creeped me out, I was fascinated by the idea that I could peer into so many corners of the world just by clicking a couple of links. I also remembered YOLO (read the paper here), a neural network architecture I had read about that achieved state-of-the-art object detection and is built on the Darknet deep learning library. I thought it would be pretty cool to scrape data from Insecam and process it with YOLO to gain some sort of insight. With that, I set out to analyze how often people were at certain locations, using Python, Jupyter Notebooks, YOLO, and data from Insecam.

Scraping Video

First things first: I had to figure out how to scrape data from Insecam. I went hunting for an Insecam API, which (unsurprisingly, given Insecam’s sketchiness) did not exist. I then inspected the individual video streams and noticed that right-clicking a video frame and opening the link in a new tab took me straight to that webcam’s IP address. Great! Now I had something to scrape. I first tried wget to see if I could simply send a request and get back a file. Unfortunately, wget kept downloading the video stream without ever stopping. I learned that most of these IP webcams stream video as Motion JPEG (MJPEG), a continuous sequence of JPEG frames with no natural end, so I had to figure out another way to process it. Luckily, after some quick digging, I found that OpenCV’s cv2.VideoCapture class can pull image frames from MJPEG streams quickly and easily.

OpenCV to the rescue! A frame from an MJPEG stream, plotted in my Jupyter Notebook.

Python and C Interop

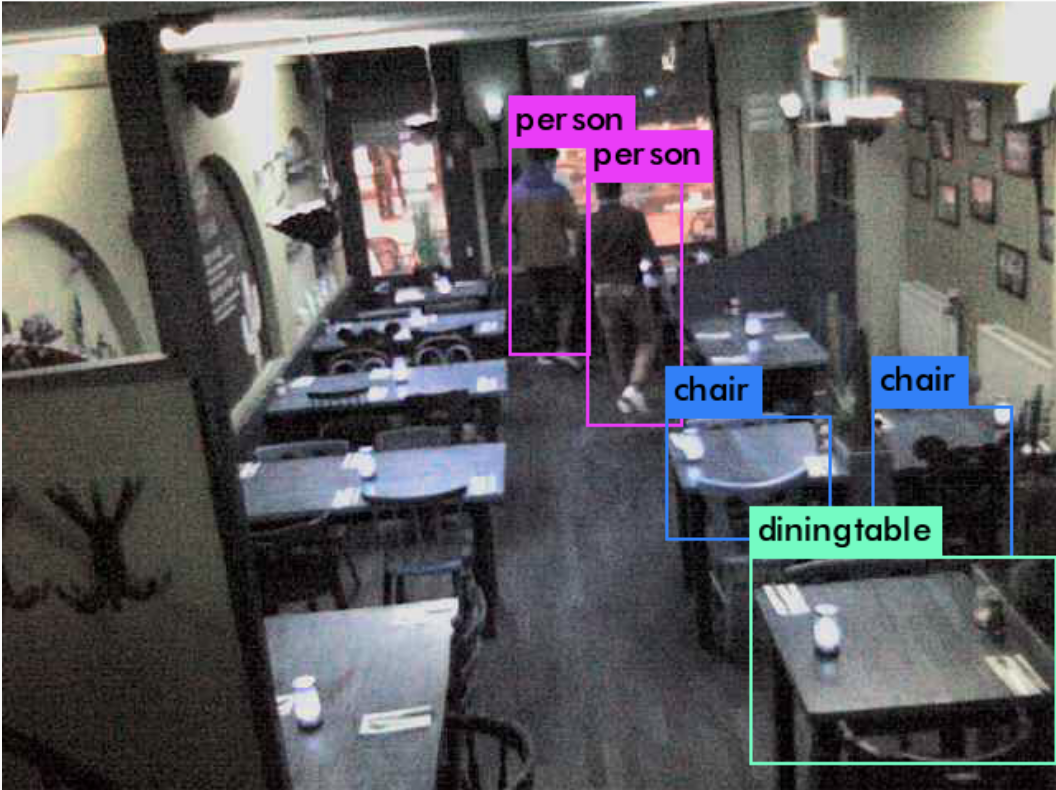

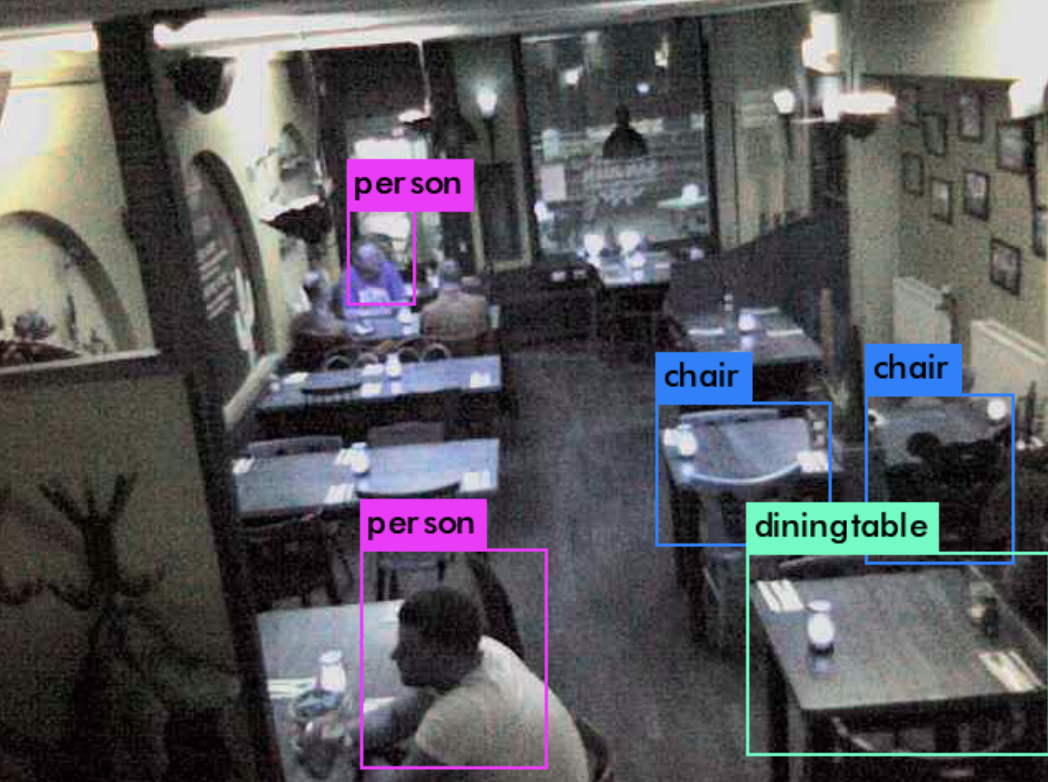

Next, I had to figure out how to run Darknet, which is written in C, from Python. The obvious choices would have been to call it through a foreign function interface (FFI) or to write a Python extension module, but I didn’t want to go down the rabbit hole and spend days developing an extension, so I settled on a somewhat hacky solution: using Python’s subprocess module, I ran the YOLO executable on an image pulled from a webcam and then parsed the executable’s console output to obtain the results. With this strategy, I could get predicted objects and confidence values from YOLO quite easily. By counting the output lines containing person, I could get the number of people YOLO thought were in the frame at that instant. It was at this stage that I decided to analyze a small restaurant in Rotterdam.

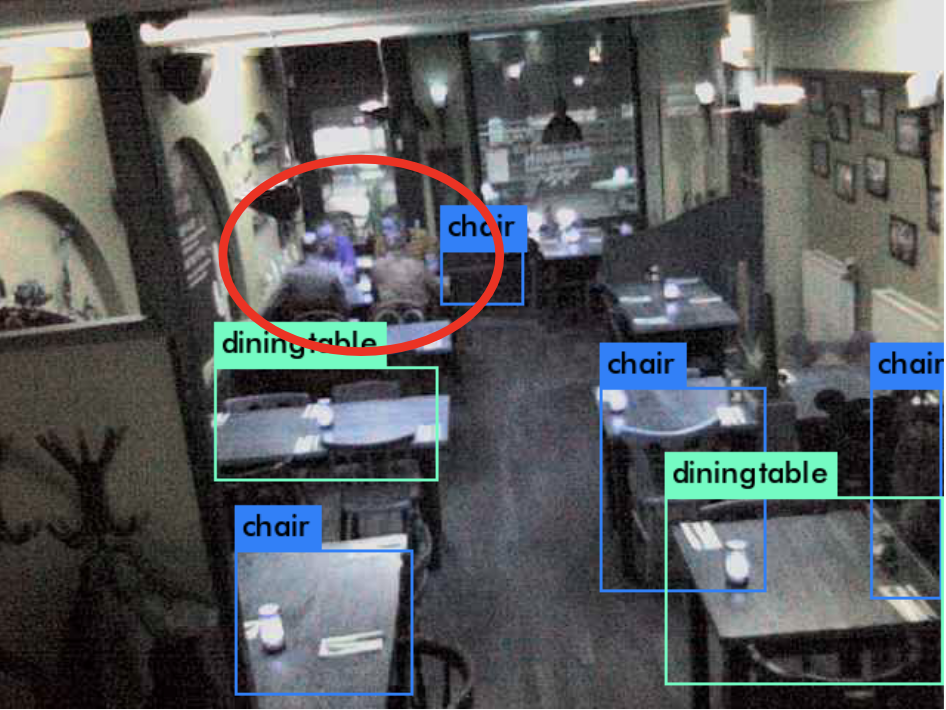

Examples of YOLO running on images from the restaurant webcam.

cam.png: Predicted in 4.906712 seconds.

person: 61%

person: 56%

Example output from the Darknet executable
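The subprocess approach can be sketched as follows. This is a minimal version under stated assumptions: a compiled Darknet checkout in a `darknet/` directory containing the YOLOv2 `cfg/yolo.cfg` and `yolo.weights` files (adjust the paths to your setup), with the detection lines printed to standard output in the `label: NN%` format shown above. The function names are hypothetical.

```python
import os
import re
import subprocess

# Assumed layout: a compiled Darknet checkout with the YOLOv2 config and weights.
DARKNET_DIR = "darknet"
DARKNET_CMD = ["./darknet", "detect", "cfg/yolo.cfg", "yolo.weights"]

# Matches detection lines such as "person: 61%" (labels may contain spaces,
# e.g. "traffic light"); the "cam.png: Predicted in ... seconds." line does not match.
DETECTION_RE = re.compile(r"^(?P<label>[\w ]+): (?P<conf>\d+)%$")


def run_yolo(image_path, darknet_dir=DARKNET_DIR):
    """Run the Darknet executable on one image and return its stdout as text."""
    result = subprocess.run(
        DARKNET_CMD + [os.path.abspath(image_path)],  # absolute path: cwd changes below
        cwd=darknet_dir,
        capture_output=True,
        text=True,
        check=True,  # raise CalledProcessError if Darknet exits with an error
    )
    return result.stdout


def parse_detections(output):
    """Return (label, confidence) pairs parsed from Darknet's console output."""
    detections = []
    for line in output.splitlines():
        match = DETECTION_RE.match(line.strip())
        if match:
            detections.append((match.group("label"), int(match.group("conf")) / 100))
    return detections


def count_people(output):
    """Count detections labelled "person", i.e. people YOLO found in the frame."""
    return sum(1 for label, _ in parse_detections(output) if label == "person")
```

Usage would be `count_people(run_yolo("cam.png"))`. The trade-off of this design is that every call starts a new process and reloads the network weights, which is part of the price of not writing a proper extension module.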

Collecting Data (Lots of it!)

Now that I had a way to count the people at a given location from webcam data, I wanted to collect a lot of data. Since I didn’t want to run YOLO on my laptop 24/7, I figured the best way to collect data nonstop would be a quick-and-dirty Flask server deployed on a cheap VPS or AWS instance. To do this, I wrote two Python scripts. The first continuously pulled image frames from the webcam, processed them with YOLO, and appended the UNIX timestamp and number of people to a CSV file on disk. The second was a simple Flask server that hosted the raw webcam image, the YOLO-processed image, and the data log. Over the week that I ran this experiment, I collected over 55,000 data points, adding a new one every 20 to 30 seconds.
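The first script's main loop might look roughly like the sketch below. `count_people` here is a hypothetical zero-argument callable standing in for the grab-a-frame-and-run-YOLO step, the 20-second default interval is an assumption based on the rate quoted above, and each row is flushed right away so the Flask server always serves an up-to-date log.

```python
import csv
import time


def collect(log_file, count_people, interval_s=20.0, iterations=None):
    """Append one `timestamp,people` row per iteration to an open CSV file.

    log_file: a text file opened in append mode with newline="".
    count_people: hypothetical callable returning the current person count.
    iterations: stop after this many rows; None means run forever.
    """
    writer = csv.writer(log_file)
    done = 0
    while iterations is None or done < iterations:
        timestamp = int(time.time())  # UNIX time at which this sample starts
        people = count_people()
        writer.writerow([timestamp, people])
        log_file.flush()  # make the new row visible to the web server immediately
        done += 1
        if iterations is None or done < iterations:
            time.sleep(interval_s)
```

For example, `with open("people.csv", "a", newline="") as log: collect(log, my_counter)`, where `people.csv` and `my_counter` are placeholders.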

Insights

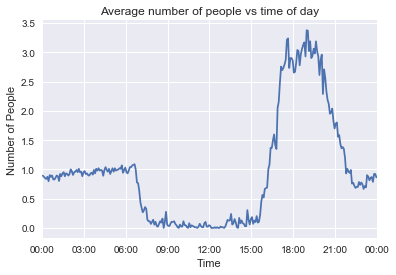

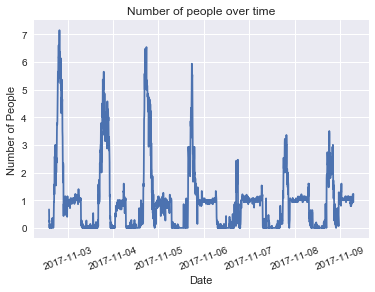

After collecting over 55,000 data points, I loaded the file into a Jupyter Notebook and used Pandas and matplotlib to try to gain some insights from the data. I binned the data into 5-minute windows and averaged all data points within each window to smooth out variations in the number of people YOLO counted. I then plotted the data in two ways: one plot shows the average number of people over the course of a single day, and the other shows the number of people over the course of the week I ran the experiment. It’s pretty cool how the data turned out: there is noticeable periodicity in the week graph, and you can clearly see the number of people vary with closing hours and dinner time in the day graph. Pretty neat, if you ask me.

Average number of people over the course of a day

Number of people over the course of a week
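The binning and day-averaging steps can be sketched in Pandas like this. It is a minimal version under a few assumptions: the log is a headerless CSV of `timestamp,people` rows (the column names are my own labels), and the UTC timestamps are converted to Rotterdam local time (`Europe/Amsterdam`) so that dinner time lands where you would expect on the day plot. The function names are hypothetical.

```python
import pandas as pd


def load_counts(df, tz="Europe/Amsterdam"):
    """Turn a timestamp/people DataFrame into a time-indexed Series of counts.

    UNIX timestamps are UTC, so they are converted to the camera's local time.
    """
    index = pd.to_datetime(df["timestamp"].to_numpy(), unit="s", utc=True).tz_convert(tz)
    return pd.Series(df["people"].to_numpy(), index=index, name="people")


def five_minute_bins(counts):
    """Average every detection inside each 5-minute window to smooth noisy counts."""
    return counts.resample("5min").mean()


def average_day(binned):
    """Average the 5-minute bins across all days, keyed by time of day."""
    return binned.groupby(binned.index.time).mean()
```

After loading the log with `pd.read_csv("people.csv", names=["timestamp", "people"])` (`people.csv` being a placeholder name), `binned.plot()` gives the week view and `average_day(binned).plot()` gives the day view. Windows with no data come out as NaN and are skipped by the day average.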

Conclusion

Overall, this little experiment turned out much better than expected. While the results themselves were unsurprising, I was surprised that YOLO recognized people well enough to reveal noticeable periodicity over time that correlated with the restaurant’s closing hours and dinner rush. However, this project was far from perfect, and there are some problems that future work could address.

Problems & Future Work

One major problem was that YOLO sometimes failed to detect people. Whether because of poor camera quality, bad lighting, or a limitation of YOLO itself, these misses may have made the recorded number of people artificially low. In future work, a convolutional neural network trained specifically to detect people could be used instead of YOLO, which is a general-purpose pretrained convnet. Furthermore, with a better user interface and a better camera, this could perhaps become a product that restaurant or property owners use to keep track of foot traffic.

Oops! YOLO missed that whole group of people.

Endnotes

If you want to get this up and running on your machine, all the code is in a GitHub repo. Just download and compile Darknet from the YOLO page, download the YOLOv2 weights, and then run both prediction.py and server.py. Alternatively, you can look through the Jupyter notebook. Warning! This code is quite ugly; I will try to clean it up in the future.

Any questions? Just shoot me an email!